Our Goal: Create an Auto Scaling EC2 Group in a single Availability Zone and use a Custom CloudWatch metric to scale up (and down) our Web Server cluster behind an ELB.

This exercise builds on the Basic Auto Scaling scenario discussed earlier, but now we will add a real Auto Scaling capability using a metric generated inside our application (like Apache Busy Workers). A previous post covers creating custom metrics in CloudWatch; you can easily adapt that configuration to any other custom metric.

What we need for this exercise:

This exercise assumes you have previous experience with EC2 Instances, Security Groups, Custom AMIs and EC2 Load Balancers.

We need:

- An empty ELB.

- A custom AMI.

- An EC2 Key Pair to access our instances.

- An EC2 Security Group.

- Access to the Auto Scaling API (if you need help configuring it, check this post).

- An Apache HTTP server with the mod_status module.

- A script to collect the mod_status value and store it in CloudWatch.

- A custom test web page called "/ping.html".

Preparation:

It is important to be sure that all the ingredients work as expected. Auto Scaling can be difficult to debug, and nasty situations may occur: a group of instances starting while you are away, or a new instance starting and stopping every 20 seconds, with bad billing consequences (AWS charges a full hour for any started instance, even if it has been running for only one minute).

I strongly suggest manually testing your components before creating an Auto Scaling configuration.

- Create your Key Pair (In my example "juankeys").

- Deploy an ELB (in my example it is named "elb-prueba") in your default AZ ("a"). Configure the ELB to use your custom /ping.html page as the Instance Health Monitor. You should see something like this:

- Create a Security Group for your Web Server instances (in my example "web-servers"). Add the ELB Security Group to this Security Group for port 80. It should look like the capture below. In this example the SG allows ping and TCP access from my home to the instances AND allows access to port 80 for connections originating from my Load Balancers (amazon-elb-sg). The Web Server port 80 is not open to the Internet; it is only open to the ELB.

- Deploy an EC2 Instance using the previously created Key Pair and Security Group. Install an Apache HTTP server and be sure it is configured to start automatically. Create a test page called /ping.html in the web server root folder. This test page can print out any text you like. Its only mission is to be present: an HTTP 200 is OK and anything else is KO.

- In this exercise we will add to our custom Linux AMI a script and a crontab configuration to create a Custom CloudWatch Metric. We will use what we've learned in this previous post. Once you have the Apache HTTP server installed and mod_status configured following the instructions in that previous post, copy this new script version:

#!/bin/bash

# Publishes the Apache BusyWorkers count as a custom CloudWatch metric.

logger "Apache Status Started"

export AWS_CREDENTIAL_FILE=/opt/aws/apitools/mon/credential-file-path.template

export AWS_CLOUDWATCH_HOME=/opt/aws/apitools/mon

export AWS_IAM_HOME=/opt/aws/apitools/iam

export AWS_PATH=/opt/aws

export AWS_AUTO_SCALING_HOME=/opt/aws/apitools/as

export AWS_ELB_HOME=/opt/aws/apitools/elb

export AWS_RDS_HOME=/opt/aws/apitools/rds

export EC2_AMITOOL_HOME=/opt/aws/amitools/ec2

export EC2_HOME=/opt/aws/apitools/ec2

export JAVA_HOME=/usr/lib/jvm/jre

export PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/opt/aws/bin:/root/bin

# Instance ID, used only in the final log line.

SERVER=$(wget -q -O - http://169.254.169.254/latest/meta-data/instance-id)

ASGROUPNAME="grupo-prueba"

# The URL is quoted so the shell does not treat "?" as a wildcard.

BUSYWORKERS=$(wget -q -O - "http://localhost/server-status?auto" | grep BusyWorkers | awk '{ print $2 }')

# Do not call mon-put-data with an empty value if Apache did not answer.

if [ -z "$BUSYWORKERS" ]; then logger "Apache Status: BusyWorkers not available"; exit 1; fi

/opt/aws/bin/mon-put-data --metric-name httpd-busyworkers --namespace "AS:$ASGROUPNAME" --unit Count --value "$BUSYWORKERS"

logger "Apache Status Ended with $SERVER $BUSYWORKERS"

It is similar to the one used before, but now we collect just one metric (instead of two) and we store it under a common CloudWatch Name Space. All instances involved in this Auto Scaling exercise will store their Busy Workers values under the same Name Space and Metric Name. In my example the Name Space will be "AS:grupo-prueba" and the Metric Name "httpd-busyworkers".

- Create a crontab configuration to execute this script every 5 minutes.

- Create your Custom AMI from the previously created temporary instance. Terminate that temporary instance when finished.

- Deploy a new instance using the recently created AMI (In my example "ami-0e5ee467") to test the Apache server and the script. Check if the HTTP Server starts automatically.

- Manually add the recently created instance to the ELB. Verify that the Load Balancer check works and gives you the status "In Service" for this instance. Verify that the /ping.html page can be accessed from the Internet using a browser and the ELB public DNS name ("http://(your-ELB-DNS-name)/ping.html").

- Verify that the script executes every 5 minutes (following the previous instructions) and that CloudWatch is storing the new metric. You can check that either in the CloudWatch console or from the command line:

# mon-get-stats --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --statistics Average

2012-11-05 15:15:00 5.0 Count

2012-11-05 15:25:00 5.0 Count

2012-11-05 15:35:00 2.0 Count

2012-11-05 15:40:00 5.0 Count

2012-11-05 15:45:00 5.0 Count

- Once everything is checked, remove the instance from the ELB and Terminate the instance.
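The parsing step of the collection script can also be checked offline, without Apache or CloudWatch. The following is a minimal sketch, assuming mod_status "?auto" output contains a line of the form "BusyWorkers: N"; the function name parse_busy_workers and the sample text are made up for illustration and are not part of the original script.

```shell
#!/bin/bash
# Sketch: extract BusyWorkers from mod_status "?auto" output read on stdin.
# Prints the integer value, or returns non-zero if it is missing or not numeric.
# parse_busy_workers is a hypothetical helper name.
parse_busy_workers() {
  local value
  value=$(awk -F': *' '$1 == "BusyWorkers" { print $2; exit }')
  case "$value" in
    ''|*[!0-9]*) return 1 ;;
    *) echo "$value" ;;
  esac
}

# Offline check with a hand-written sample (not real server output).
sample='Total Accesses: 120
BusyWorkers: 5
IdleWorkers: 3'
printf '%s\n' "$sample" | parse_busy_workers
```

On an instance, the same function could be fed with: wget -q -O - "http://localhost/server-status?auto" | parse_busy_workers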

Definition:

# as-create-launch-config config-prueba --image-id ami-0e5ee467 --instance-type t1.micro --monitoring-disabled --group web-servers --key juankeys

OK-Created launch config

# as-create-auto-scaling-group grupo-prueba --launch-configuration config-prueba --availability-zones us-east-1a --min-size 0 --max-size 4 --load-balancers elb-prueba --health-check-type ELB --grace-period 120

OK-Created AutoScalingGroup

With as-create-launch-config we define the instance configuration we will be using in our Auto Scaling Group: Launch Config name, AMI ID, Instance Type, Detailed Monitoring (1-minute monitoring) disabled, Security Group and Key Pair to use.

With as-create-auto-scaling-group we define the group itself: Group Name, Launch Config to use, AZs to deploy in, the minimum number of running instances that our application needs, the maximum number of instances we want to scale up to, ELB name, the Health Check type set to ELB (the default is the EC2 status check) and the grace period granted to an instance after launch before it is checked (in seconds).

# as-put-scaling-policy scale-up-prueba --auto-scaling-group grupo-prueba --adjustment=1 --type ChangeInCapacity --cooldown 300

arn:aws:autoscaling:us-east-1:085366056805:scalingPolicy:36101053-f0f3-4c7c-bc4c-60a8a2a943a1:autoScalingGroupName/grupo-prueba:policyName/scale-up-prueba

With as-put-scaling-policy we create a Policy called "scale-up-prueba" for the previously created AS Group. When triggered, it will increase the group's capacity by one unit (one instance). No other AS activities for this Group are allowed until 300 seconds have passed. After this successful API call an ARN identifier is returned. Save it because we will need it for the Alarm definition.
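Since the ARN has to be pasted into the alarm definition, it can help to capture it in a shell variable and check its shape first. This is only a sketch: the function name looks_like_policy_arn and the variable SCALE_UP_ARN are hypothetical, and the pattern is inferred from the ARN printed in this post, not from a formal specification.

```shell
#!/bin/bash
# Sketch: succeed only if the argument looks like an Auto Scaling policy ARN.
# The pattern is an assumption based on the ARNs shown in this exercise.
looks_like_policy_arn() {
  case "$1" in
    arn:aws:autoscaling:*:scalingPolicy:*:autoScalingGroupName/*:policyName/*) return 0 ;;
    *) return 1 ;;
  esac
}

# On a machine with the Auto Scaling tools installed, the ARN could be captured like this:
#   SCALE_UP_ARN=$(as-put-scaling-policy scale-up-prueba --auto-scaling-group grupo-prueba \
#       --adjustment=1 --type ChangeInCapacity --cooldown 300)
#   looks_like_policy_arn "$SCALE_UP_ARN" || echo "unexpected output: $SCALE_UP_ARN" >&2
```

The variable can then be passed to --alarm-actions instead of copying the long string by hand.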

# mon-put-metric-alarm scale-up-alarm --comparison-operator GreaterThanThreshold --evaluation-periods 1 --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --period 600 --statistic Average --threshold 10 --alarm-actions arn:aws:autoscaling:us-east-1:085366056805:scalingPolicy:36101053-f0f3-4c7c-bc4c-60a8a2a943a1:autoScalingGroupName/grupo-prueba:policyName/scale-up-prueba

OK-Created Alarm

With mon-put-metric-alarm we create a new CloudWatch alarm called "scale-up-alarm" that will be triggered when the average of all the "httpd-busyworkers" values over the last 10 minutes is greater than 10. Then the scale-up policy will be executed through the ARN identifier. In this example, each Apache server with no external load has an average of 5 busy workers, so a good way to test it is to define a threshold of 10 to increase our cluster capacity. In a real-world configuration those values will be very different and you have to tune them to match your application.

# as-put-scaling-policy scale-down-prueba --auto-scaling-group grupo-prueba --adjustment=-1 --type ChangeInCapacity --cooldown 300

arn:aws:autoscaling:us-east-1:085366056805:scalingPolicy:0763114c-f1d3-4f35-a9c5-56c2a7466073:autoScalingGroupName/grupo-prueba:policyName/scale-down-prueba

Now we've created the Policy to be executed when capacity of the AS Group needs to be reduced. And a new ARN identifier is received.

# mon-put-metric-alarm scale-down-alarm --comparison-operator LessThanThreshold --evaluation-periods 1 --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --period 600 --statistic Average --threshold 9 --alarm-actions arn:aws:autoscaling:us-east-1:085366056805:scalingPolicy:0763114c-f1d3-4f35-a9c5-56c2a7466073:autoScalingGroupName/grupo-prueba:policyName/scale-down-prueba

OK-Created Alarm

The same way we did before with the scale-up alarm, we create a new one to trigger the scale-down process. The configuration is the same, but now the alarm fires when the 10-minute average drops below 9 Apache busy workers.
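To see how the two thresholds interact, here is a small sketch that averages a handful of samples and reports which alarm would fire. It is a simplified model of a single 600-second evaluation period, not a description of how CloudWatch works internally; the function name evaluate_alarms and the sample values are made up.

```shell
#!/bin/bash
# Sketch: average the samples given as arguments and compare the result with
# the two thresholds used in this exercise
# (GreaterThanThreshold 10 for scale-up, LessThanThreshold 9 for scale-down).
evaluate_alarms() {
  if [ "$#" -eq 0 ]; then
    echo "no-data"
    return 0
  fi
  printf '%s\n' "$@" | awk '
    { sum += $1; n++ }
    END {
      avg = sum / n
      if (avg > 10)     print "scale-up"
      else if (avg < 9) print "scale-down"
      else              print "none"
    }'
}

evaluate_alarms 5 5        # idle server: average 5
evaluate_alarms 5 20 20    # injected load: average 15
evaluate_alarms 9 10       # average 9.5 sits between both thresholds
```

The gap between 9 and 10 means that an average in that range triggers neither policy, which gives a small margin against flapping.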

Note: By default all the API calls are sent to the us-east-1 Region (N.Virginia).

Describe:

# as-describe-launch-configs --headers

LAUNCH-CONFIG NAME IMAGE-ID TYPE

LAUNCH-CONFIG config-prueba ami-0e5ee467 t1.micro

# as-describe-auto-scaling-groups --headers

AUTO-SCALING-GROUP GROUP-NAME LAUNCH-CONFIG AVAILABILITY-ZONES LOAD-BALANCERS MIN-SIZE MAX-SIZE DESIRED-CAPACITY TERMINATION-POLICIES

AUTO-SCALING-GROUP grupo-prueba config-prueba us-east-1a elb-prueba 0 4 0 Default


We use the "as-describe-" commands to read back the configuration we have just created. Pay special attention to as-describe-auto-scaling-instances:

# as-describe-auto-scaling-instances --headers

No instances found

This command gives us a quick look at the running instances within our AS Groups. It is very useful when dealing with AS to find out the number of instances running and their state. Right now the result is "No instances found", and this is correct: our current configuration says that zero is the minimum number of healthy instances our application needs to work.

We can describe the recently created alarms with mon-describe-alarms:

# mon-describe-alarms --headers

ALARM STATE ALARM_ACTIONS NAMESPACE METRIC_NAME PERIOD STATISTIC EVAL_PERIODS COMPARISON THRESHOLD

scale-down-alarm ALARM arn:aws:autoscalin...6056805:AutoScaling AS:grupo-prueba httpd-busyworkers 600 Average 1 LessThanThreshold 9.0

scale-up-alarm OK arn:aws:sns:us-eas...ame/scale-up-prueba AS:grupo-prueba httpd-busyworkers 600 Average 1 GreaterThanThreshold 10.0

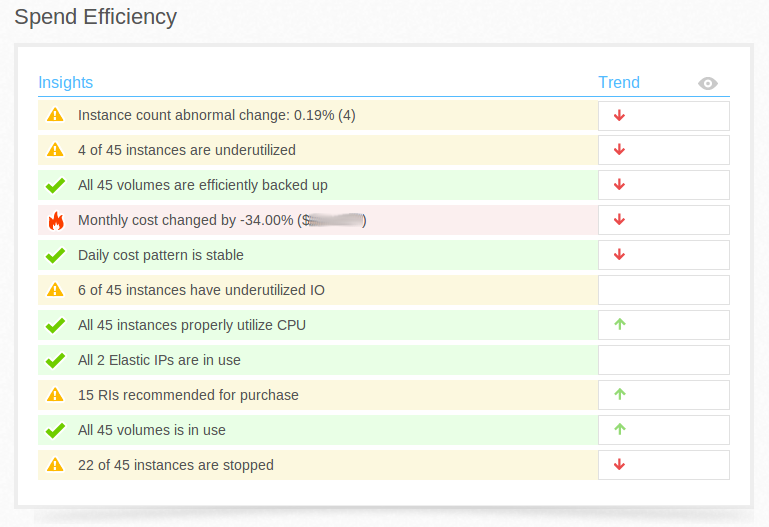

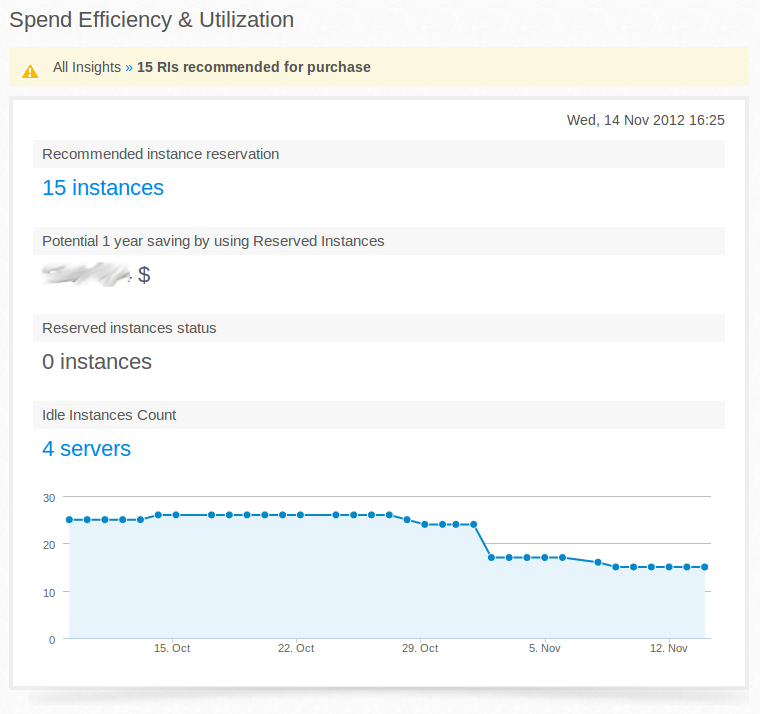

Or using the CloudWatch Console:

With no load, the "scale-down-alarm" stays in the "Alarm" state. This is expected: the average is below its threshold, and the policy has no effect while the group is already at its minimum size.

Using the CloudWatch Console you can add to these alarms an action that sends an email notification, to get better visibility during the test.

Bring it to Production:

Now the cluster is idle, with no instances running. We will now tell AS that our application requires a minimum of 1 healthy instance to run:

# as-update-auto-scaling-group grupo-prueba --min-size 1

OK-Updated AutoScalingGroup

# as-describe-auto-scaling-groups --headers

AUTO-SCALING-GROUP GROUP-NAME LAUNCH-CONFIG AVAILABILITY-ZONES LOAD-BALANCERS MIN-SIZE MAX-SIZE DESIRED-CAPACITY TERMINATION-POLICIES

AUTO-SCALING-GROUP grupo-prueba config-prueba us-east-1a elb-prueba 1 4 1 Default

INSTANCE INSTANCE-ID AVAILABILITY-ZONE STATE STATUS LAUNCH-CONFIG

INSTANCE i-9d022be1 us-east-1a Pending Healthy config-prueba

Notice that the minimum is now 1 in the AS configuration and that there is a new instance in our AS Group ("i-9d022be1" in this example). This instance has been automatically deployed by AS to match the desired number of healthy instances for our application. Notice the "Pending" state, which means that it is still in the initialization process. We can follow this process with as-describe-auto-scaling-instances:

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a Pending HEALTHY config-prueba

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a Pending HEALTHY config-prueba

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a Pending HEALTHY config-prueba

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a InService HEALTHY config-prueba

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a InService HEALTHY config-prueba

Now the recently launched instance is in service. That means that its Health Check (ELB ping.html test page) verifies OK. If you open the AWS Console and read the current ELB "Instances Tab", the new instance ID should be there, automatically added to the Load Balancer and your application up and running.
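Instead of re-running the command by hand, the listing can be filtered with a small helper. This is a minimal sketch that assumes the column order seen in the listings above (INSTANCE, instance ID, group, zone, state, status, launch config); the function name count_in_service is hypothetical.

```shell
#!/bin/bash
# Sketch: count InService instances of one group in the output of
# as-describe-auto-scaling-instances, read on stdin.
# Assumed columns: INSTANCE <id> <group> <zone> <state> <status> <launch-config>
count_in_service() {
  awk -v group="$1" '
    $1 == "INSTANCE" && $3 == group && $5 == "InService" { n++ }
    END { print n + 0 }'
}

# Offline check using lines copied from the listings in this post.
printf '%s\n' \
  'INSTANCE i-9d022be1 grupo-prueba us-east-1a InService HEALTHY config-prueba' \
  'INSTANCE i-ebeac397 grupo-prueba us-east-1a Pending HEALTHY config-prueba' |
  count_in_service grupo-prueba

# With the Auto Scaling tools installed, a polling loop could look like:
#   until [ "$(as-describe-auto-scaling-instances | count_in_service grupo-prueba)" -ge 1 ]; do
#     sleep 15
#   done
```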

Common problem scenarios:

- If you observe that new instances are constantly launched and terminated by AS, this probably means that the /ping.html check fails. Stop the experiment with "as-update-auto-scaling-group grupo-prueba --min-size 0" and verify your components.

- If your web server and test page verify OK but AS is still deploying and terminating the instances before they have a chance to reach the Healthy status, then you should increase the value of "--grace-period" in the AS Group definition to give your AMI more time to start and initialize its services.

- If the instances start but fail to be added automatically to the ELB, then the instances are probably being deployed in an incorrect Availability Zone. Either correct the Availability Zones in your AS Group definition or expand the ELB to the rest of the AZs in your Region.

Force to Scale UP:

To test the AS Policy we can lie to CloudWatch and tell it that we have much more load than we really have. We will inject a false amount of Busy Workers to the CW Metric:

# mon-put-data --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --unit Count --value 20

# mon-put-data --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --unit Count --value 20

# mon-put-data --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --unit Count --value 20

# mon-get-stats --metric-name httpd-busyworkers --namespace "AS:grupo-prueba" --statistics Average

2012-11-05 15:35:00 2.0 Count

2012-11-05 15:40:00 5.0 Count

2012-11-05 15:45:00 5.0 Count

2012-11-05 15:50:00 5.0 Count

2012-11-05 15:55:00 5.0 Count

2012-11-05 16:00:00 2.0 Count

2012-11-05 16:15:00 5.0 Count

2012-11-05 16:20:00 5.0 Count

2012-11-05 16:21:00 20.0 Count

2012-11-05 16:23:00 20.0 Count
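Keeping the average high requires repeating the injection for several minutes. The loop below is a sketch of one way to do that; the function name inject_fake_load, the DRY_RUN switch and the 60-second default pause are assumptions for illustration. By default it only prints the commands it would run.

```shell
#!/bin/bash
# Sketch: send the same fake BusyWorkers value several times.
# Arguments: <rounds> <value> [pause-in-seconds, default 60]
# DRY_RUN=1 (the default here) prints the commands instead of running them.
inject_fake_load() {
  local rounds=$1 value=$2 pause=${3:-60}
  local run="" i=1
  [ "${DRY_RUN:-1}" = "1" ] && run="echo"
  while [ "$i" -le "$rounds" ]; do
    $run mon-put-data --metric-name httpd-busyworkers \
      --namespace "AS:grupo-prueba" --unit Count --value "$value"
    [ -z "$run" ] && sleep "$pause"
    i=$((i + 1))
  done
}

# Prints three mon-put-data commands without contacting CloudWatch.
inject_fake_load 3 20
```

Running it with DRY_RUN=0 on a machine with the CloudWatch tools installed would send the values for real, so remember the billing warning from the Preparation section.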


And after a while, the average Busy Workers value rises and this triggers the scale up Alarm and then its AS Policy:

# as-describe-scaling-activities --headers --show-long view

ACTIVITY,135c95fa-8d67-4664-85e4-5d78dfb73353,2012-11-05T16:25:13Z,grupo-prueba,Successful,(nil),"At 2012-11-05T16:24:14Z a monitor alarm scale-up-alarm in state ALARM triggered policy scale-up-prueba changing the desired capacity from 1 to 2. At 2012-11-05T16:24:27Z an instance was started in response to a difference between desired and actual capacity, increasing the capacity from 1 to 2.",100,Launching a new EC2 instance: i-ebeac397,(nil),2012-11-05T16:24:27.687Z


And a second instance is automatically launched:

# as-describe-auto-scaling-instances

INSTANCE i-9d022be1 grupo-prueba us-east-1a InService HEALTHY config-prueba

INSTANCE i-ebeac397 grupo-prueba us-east-1a InService HEALTHY config-prueba

If we keep feeding CloudWatch with fake values and we keep the average high, soon a third instance will be launched:

# as-describe-scaling-activities --headers --show-long view

ACTIVITY,ACTIVITY-ID,END-TIME,GROUP-NAME,CODE,MESSAGE,CAUSE,PROGRESS,DESCRIPTION,UPDATE-TIME,START-TIME

ACTIVITY,ef187965-9a79-463f-8a2d-b6f413cc9226,2012-11-05T16:31:11Z,grupo-prueba,Successful,(nil),"At 2012-11-05T16:30:14Z a monitor alarm scale-up-alarm in state ALARM triggered policy scale-up-prueba changing the desired capacity from 2 to 3. At 2012-11-05T16:30:30Z an instance was started in response to a difference between desired and actual capacity, increasing the capacity from 2 to 3.",100,Launching a new EC2 instance: i-99e4cde5,(nil),2012-11-05T16:30:30.795Z

# as-describe-auto-scaling-instances

INSTANCE i-99e4cde5 grupo-prueba us-east-1a InService HEALTHY config-prueba

INSTANCE i-9d022be1 grupo-prueba us-east-1a InService HEALTHY config-prueba

INSTANCE i-ebeac397 grupo-prueba us-east-1a InService HEALTHY config-prueba

If we leave it alone for a while, the average will decrease and the automatically launched instances will be terminated one at a time, a few minutes apart (between 6 and 7 minutes in this run, as the activity timestamps show):

# as-describe-auto-scaling-instances

INSTANCE i-99e4cde5 grupo-prueba us-east-1a InService HEALTHY config-prueba

INSTANCE i-9d022be1 grupo-prueba us-east-1a Terminating HEALTHY config-prueba

INSTANCE i-ebeac397 grupo-prueba us-east-1a InService HEALTHY config-prueba

# as-describe-scaling-activities --headers --show-long view

ACTIVITY,ACTIVITY-ID,END-TIME,GROUP-NAME,CODE,MESSAGE,CAUSE,PROGRESS,DESCRIPTION,UPDATE-TIME,START-TIME

ACTIVITY,7095a10e-d7b7-4e68-a1c9-cb350e8b0d45,2012-11-05T16:45:03Z,grupo-prueba,Successful,(nil),"At 2012-11-05T16:43:48Z a monitor alarm scale-down-alarm in state ALARM triggered policy scale-down-prueba changing the desired capacity from 3 to 2. At 2012-11-05T16:44:04Z an instance was taken out of service in response to a difference between desired and actual capacity, shrinking the capacity from 3 to 2. At 2012-11-05T16:44:04Z instance i-9d022be1 was selected for termination.",100,Terminating EC2 instance: i-9d022be1,(nil),2012-11-05T16:44:04.106Z

# as-describe-auto-scaling-instances

INSTANCE i-99e4cde5 grupo-prueba us-east-1a InService HEALTHY config-prueba

INSTANCE i-ebeac397 grupo-prueba us-east-1a InService HEALTHY config-prueba

# as-describe-scaling-activities --headers --show-long view

ACTIVITY,ACTIVITY-ID,END-TIME,GROUP-NAME,CODE,MESSAGE,CAUSE,PROGRESS,DESCRIPTION,UPDATE-TIME,START-TIME

ACTIVITY,31e8673e-7255-410e-b8a7-51ee677f2bb8,(nil),grupo-prueba,InProgress,(nil),"At 2012-11-05T16:50:23Z a monitor alarm scale-down-alarm in state ALARM triggered policy scale-down-prueba changing the desired capacity from 2 to 1. At 2012-11-05T16:50:35Z an instance was taken out of service in response to a difference between desired and actual capacity, shrinking the capacity from 2 to 1. At 2012-11-05T16:50:35Z instance i-ebeac397 was selected for termination.",50,Terminating EC2 instance: i-ebeac397,(nil),2012-11-05T16:50:35.538Z

# as-describe-auto-scaling-instances

INSTANCE i-99e4cde5 grupo-prueba us-east-1a InService HEALTHY config-prueba


We have learned something here:

An instance in an AS environment is volatile. It can be terminated at any time, and its EBS volumes disappear with it. You have to take that into account when designing your infrastructure. If your web server needs to store information that you may need later, you should save it elsewhere: CloudWatch, an external log server, a database, etc.

Also notice that the surviving instance is i-99e4cde5, the last one that was deployed, and that the first one to be terminated during the shrinking process was the first member of the group. Auto Scaling uses that logic to help you get more value for your money. EC2 bills you for the full hour, so keeping the most recently launched instance alive gives you a chance to use what you've already paid for.

Average of what?

The Policy used in this example is not a perfect method, and this Average metric is a bit confusing. First we have to know that the Average CPU used in the official documentation for Auto Scaling is a native CloudWatch metric. It is automatically created when you define your AS Group. EC2 takes the CPU usage of all instances in your AS Group and stores the average value there (it does the same with other EC2 metrics: CW Console -> All Metrics pull-down menu -> "EC2: Aggregated by Auto Scaling Group"). An elegant method would be to do the same kind of aggregation with our custom metric, but I don't know how to do that. So what we have is a single metric name receiving all those different values from our cluster members. It is therefore important that all members send their values on the same schedule, so that they do not distort the average calculation. I think that a "crontab */5 * * * *" is a good solution, but I'm quite open to other suggestions.
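A quick bit of arithmetic shows why the reporting schedule matters. The sketch below computes the plain average of every datapoint stored under one metric name, which is the simplified view of the Average statistic used in this post; the function name average and the worker counts are made up for illustration.

```shell
#!/bin/bash
# Sketch: plain average of all datapoints stored under one metric name.
# Requires at least one numeric argument.
average() {
  [ "$#" -gt 0 ] || return 1
  printf '%s\n' "$@" | LC_ALL=C awk '{ sum += $1 } END { printf "%.2f\n", sum / NR }'
}

# Two instances: A with 5 busy workers and B with 15 (made-up numbers).
average 5 15        # each reports once in the period: 10.00
average 5 5 5 15    # A reports three times, B once: 7.50
```

In the second case the busier instance is under-represented, so the average stays below the scale-up threshold even though the real per-instance mean is 10.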

The ELB role:

By default the Load Balancer sends an equal share of connections to each web cluster member, so the number of Apache Busy Workers remains "balanced" across the cluster. The configuration described here is not useful with "sticky sessions": if one web server accumulates more connections than the other cluster members, it could trigger an unnecessary scale-up action.

Cleaning:

You don't want an AS Group doing things while you sleep, so I suggest deleting all your AS configurations once your test is done.

# as-update-auto-scaling-group grupo-prueba --min-size 0

OK-Updated AutoScalingGroup

# as-update-auto-scaling-group grupo-prueba --desired-capacity 0

OK-Updated AutoScalingGroup

# as-delete-auto-scaling-group grupo-prueba

Are you sure you want to delete this AutoScalingGroup? [Ny]y

OK-Deleted AutoScalingGroup

# as-delete-launch-config config-prueba

Are you sure you want to delete this launch configuration? [Ny]y

OK-Deleted launch configuration

# as-describe-auto-scaling-instances

No instances found