Disclaimer: Modifying security credentials could result in losing access to your server if something goes wrong. I strongly suggest you test the method described here in your Development environment before using it in Production.

Key pairs are the standard method of authenticating SSH access to our EC2 instances based on the Amazon Linux AMI. We can easily create new key pairs for our team using the ssh-keygen command and manually add them to the file /home/ec2-user/.ssh/authorized_keys for those with root access.
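As a rough sketch of that manual workflow, the helper below generates a key pair for one team member and appends the public half to an authorized_keys file. The function name add_member_key, the 4096-bit RSA choice and the file locations are illustrative assumptions, not part of the original procedure.

```shell
# Sketch: create a key pair for a team member and register the public key.
# add_member_key and its arguments are hypothetical names used for illustration.
add_member_key() {
    local member="$1"      # comment stored in the key, e.g. "juan"
    local keydir="$2"      # directory where the key pair is written
    local authfile="$3"    # authorized_keys file to append to

    # -N "" creates the private key without a passphrase (demo only);
    # drop -N to be prompted for one.
    ssh-keygen -q -t rsa -b 4096 -N "" -C "$member" -f "$keydir/$member" || return 1

    # The .pub file is a single line: "ssh-rsa <base64> <comment>"
    cat "$keydir/$member.pub" >> "$authfile"
    chmod 600 "$authfile"
}
```

For example, `add_member_key juan ~/keys ~/keys/authorized_keys` would leave the private key in ~/keys/juan and add one line ending in "juan" to the authorized_keys file.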

Format:

/home/ec2-user/.ssh/authorized_keys

ssh-rsa AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA== main-key

ssh-rsa BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB

BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB== juan

ssh-rsa CCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC

CCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC== pedro

ssh-rsa DDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDD

DDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDD== luis

|
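Because every entry has to sit on a single line in the form key type, base64 key, optional comment, a quick check before distributing the file can catch wrapped or truncated entries. The function below is a minimal sketch of such a check. The name check_authorized_keys is hypothetical, and it assumes entries do not start with options such as command="...".

```shell
# Sketch: report lines of an authorized_keys file that do not look like
# "<key-type> <base64-key> [comment]". Entries that begin with options
# are NOT handled; that is a simplifying assumption of this example.
check_authorized_keys() {
    awk '
        # skip blank lines and comments
        NF == 0 || /^#/ { next }
        # first field must be a known key type, second field must be base64
        $1 !~ /^(ssh-(rsa|dss|ed25519)|ecdsa-sha2-nistp(256|384|521))$/ ||
        $2 !~ "^[A-Za-z0-9+/]+=*$" {
            printf "line %d: unexpected format\n", NR
            bad = 1
        }
        END { exit bad }
    ' "$1"
}
```

The function returns a non-zero status when at least one line is rejected, so it can guard an upload step: `check_authorized_keys authorized_keys && echo "file looks sane"`. A key that was wrapped onto a second line is reported at the continuation line.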

But as the number of instances and team members grows, we need a centralized way to distribute this file.

Goal

- Store an authorized_keys file in S3, encrypted "at rest".

- Transport this file from S3 to the instance securely.

- Give access to this file only to the right instances.

- Do not store any API Access Keys in the script involved.

- Store all the temporary files in RAM.

S3

- Create a bucket. In this example it is "tarro".

- Create an authorized_keys file on your local machine and upload it to the new bucket.

- Open the file properties in the S3 Console, select Server Side Encryption = AES256 and Save.

- Calculate the MD5 of the file with md5sum.

Example:

$ md5sum authorized_keys

690f9d901801849f6f54eced2b2d1849  authorized_keys

- Create a file called authorized_keys.md5, copy the md5sum result into it (only the hexadecimal string of numbers and letters) and upload it to the same S3 bucket.

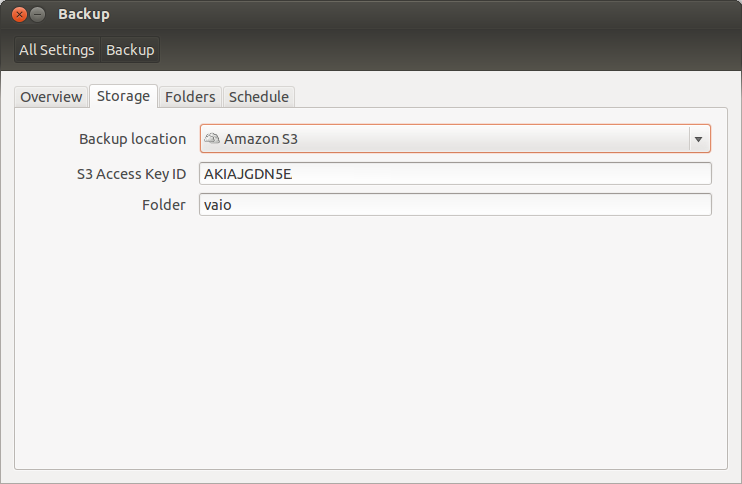
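The same S3 steps can also be scripted from an administrator workstation instead of the console. The sketch below assumes a recent AWS CLI with write access to the bucket (a different credential from the read-only instance role used later). The helper names write_md5_file and publish_keys are hypothetical.

```shell
# Sketch: build authorized_keys.md5 and upload both files with SSE enabled.
# Assumes a recent AWS CLI on the admin workstation with write access to the
# bucket; write_md5_file and publish_keys are hypothetical helper names.
write_md5_file() {
    # Keep only the hexadecimal digest, not the file name md5sum prints after it
    md5sum "$1" | awk '{print $1}' > "$1.md5"
}

publish_keys() {
    local file="$1" bucket="$2"
    write_md5_file "$file" || return 1
    # --sse AES256 requests server-side encryption at upload time
    aws s3 cp "$file" "s3://$bucket/authorized_keys" --sse AES256 &&
    aws s3 cp "$file.md5" "s3://$bucket/authorized_keys.md5" --sse AES256
}
```

With the bucket from this example the call would be `publish_keys authorized_keys tarro`.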

IAM

We will use an EC2 IAM instance role. This way we don't need to store a copy of our API Access Key on the instances that will be accessing the secured files.

The AWS Command Line Interface (AWS CLI) automatically queries the EC2 Instance Metadata and retrieves the temporary security credentials needed to connect to S3. We will specify a role policy to grant read access to the bucket that contains those files.
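To see what the AWS CLI picks up behind the scenes, the temporary credentials can be inspected by hand from inside an instance. The sketch below uses the standard EC2 instance metadata address. The function names are hypothetical, and instances configured to require IMDSv2 need a session token to be requested first, which this example leaves out.

```shell
# Sketch: look at the temporary credentials the instance role exposes.
# 169.254.169.254 is the standard EC2 instance metadata endpoint; the
# function names here are hypothetical.
role_credentials_url() {
    printf 'http://169.254.169.254/latest/meta-data/iam/security-credentials/%s\n' "$1"
}

show_role_credentials() {
    # Returns a JSON document with AccessKeyId, SecretAccessKey, Token and Expiration.
    # Only works from inside an EC2 instance that has the role attached.
    curl -s --max-time 2 "$(role_credentials_url "$1")"
}
```

On an instance launched with the role from this example, `show_role_credentials demo-role` would print the current credential set, which AWS rotates automatically before it expires.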

- Create a role using the IAM Console. In my example it is "demo-role".

- Select Role Type = Amazon EC2.

- Select Custom Policy.

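- Create a role policy to grant read access only to the "tarro" bucket. s3:GetObject applies to the objects (tarro/*) while s3:ListBucket applies to the bucket itself, so both ARNs are listed. Example:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Sid": "Stmt1380102067000",
      "Resource": [
        "arn:aws:s3:::tarro",
        "arn:aws:s3:::tarro/*"
      ],
      "Effect": "Allow"
    }
  ]
}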
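If you keep one bucket per environment or application tier, a policy of this shape can be generated per bucket rather than edited by hand. The sketch below is one possible way to do it. The function name bucket_read_policy and the Sid value are arbitrary choices for illustration. It lists both the bucket ARN and the object ARN because s3:ListBucket is evaluated against the bucket and s3:GetObject against the objects.

```shell
# Sketch: print a read-only policy for a given bucket.
# bucket_read_policy is a hypothetical helper; the Sid is an arbitrary label.
bucket_read_policy() {
    local bucket="$1"
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ReadKeysBucket",
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:ListBucket"],
      "Resource": ["arn:aws:s3:::${bucket}", "arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}
```

The output can be saved with `bucket_read_policy tarro > policy.json` and pasted into the console, or attached from an administrator workstation with `aws iam put-role-policy --role-name demo-role --policy-name read-keys --policy-document file://policy.json` (the policy name read-keys is made up for this example).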

EC2

We will use Amazon Linux AMI 2013.09, which includes AWS CLI.

- Launch your instance as you usually do, but now select the IAM Role and choose the appropriate one. In my example it is "demo-role", but you could have different roles for every application tier: web servers, databases, test, etc.

- As root, create the directory /root/bin/

- In /root/bin/ create the file deploy-keys.sh with the following content:

#!/bin/bash
#
# /root/bin/deploy-keys.sh
# Install centralized authorized_keys file from S3 securely using temporary security credentials
# blog.domenech.org
#

### User defined variables
BUCKET="tarro"
TMPFOLDER="/media/tmpfs/"
# (Finish TMPFOLDER variable with slash)

# Note:
# The temporary folder in RAM size is 1 Megabyte.
# If you are planning to deal with files bigger than that you have to change
# the size= option of the mount command below accordingly

# Create temporary folder
if [ ! -e "$TMPFOLDER" ]
then
    mkdir -p "$TMPFOLDER"
fi

# Mount temporary folder in RAM (abort if it fails, so nothing is written to disk)
if ! mount -t tmpfs -o size=1M,mode=700 tmpfs "$TMPFOLDER"
then
    logger "deploy-keys.sh: mounting tmpfs on $TMPFOLDER failed! Exiting..."
    exit 1
fi

# Get-Object from S3 (the JSON response printed by the CLI is discarded)
if ! aws s3api get-object --bucket "$BUCKET" --key "authorized_keys" "${TMPFOLDER}authorized_keys" > /dev/null
then
    umount "$TMPFOLDER"
    logger "deploy-keys.sh: aws s3api get-object authorized_keys failed! Exiting..."
    exit 1
fi

# Get-Object from S3 (MD5)
if ! aws s3api get-object --bucket "$BUCKET" --key "authorized_keys.md5" "${TMPFOLDER}authorized_keys.md5" > /dev/null
then
    umount "$TMPFOLDER"
    logger "deploy-keys.sh: aws s3api get-object authorized_keys.md5 failed! Exiting..."
    exit 1
fi

# Check MD5, copy the new file if matches and clean up
# (only the first field of the first line is used, so stray whitespace is ignored)
MD5=$(awk 'NR == 1 {print $1}' "${TMPFOLDER}authorized_keys.md5")
MD5NOW=$(md5sum "${TMPFOLDER}authorized_keys" | awk '{print $1}')
if [ -n "$MD5" ] && [ "$MD5" = "$MD5NOW" ]
then
    mv --update /home/ec2-user/.ssh/authorized_keys /home/ec2-user/.ssh/authorized_keys.original
    cp --force "${TMPFOLDER}authorized_keys" /home/ec2-user/.ssh/authorized_keys
    chown ec2-user:ec2-user /home/ec2-user/.ssh/authorized_keys
    chmod go-rwx /home/ec2-user/.ssh/authorized_keys
    # The unmount command will delete all the files in RAM but we are extra cautious here shredding and removing
    shred "${TMPFOLDER}authorized_keys" "${TMPFOLDER}authorized_keys.md5"
    rm -f "${TMPFOLDER}authorized_keys" "${TMPFOLDER}authorized_keys.md5"
    umount "$TMPFOLDER"
    logger "deploy-keys.sh: Keys updated successfully."
    exit 0
else
    shred "${TMPFOLDER}authorized_keys" "${TMPFOLDER}authorized_keys.md5"
    rm -f "${TMPFOLDER}authorized_keys" "${TMPFOLDER}authorized_keys.md5"
    umount "$TMPFOLDER"
    logger "deploy-keys.sh: MD5 check failed! Exiting..."
    exit 1
fi
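The integrity check at the end of the script can be tried in isolation, without S3 or root access, which is handy when changing the script. The function below is a minimal sketch of that comparison. The name md5_matches is hypothetical and it is not part of deploy-keys.sh.

```shell
# Sketch: the MD5 comparison from deploy-keys.sh as a stand-alone function.
# md5_matches is a hypothetical name; it succeeds only when the digest stored
# in the .md5 file equals the digest of the downloaded file.
md5_matches() {
    local file="$1" md5file="$2" expected actual
    # First field of the first line, so trailing whitespace does not matter
    expected=$(awk 'NR == 1 {print $1}' "$md5file")
    actual=$(md5sum "$file" | awk '{print $1}')
    # An empty expected value (blank .md5 file) never matches
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}
```

A call such as `md5_matches authorized_keys authorized_keys.md5 && echo OK` mirrors the decision the script makes before replacing the live file. Note that the check detects truncated or corrupted downloads; it does not protect against someone who is able to replace both objects in the bucket.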

- Give root execute permission on the script and remove the unnecessary read/write permissions for group and others.

Or you can do it all at once more easily by executing this command:

mkdir -p /root/bin/ && cd /root/bin/ && wget -q http://www.domenech.org/files/deploy-keys.sh && chmod u+x deploy-keys.sh && chmod go-rwx deploy-keys.sh && chown root:root deploy-keys.sh

Then test the script. You can check the script results in /var/log/messages
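Since every logger call in the script starts its message with "deploy-keys.sh:", the relevant entries are easy to filter out of the system log. The helper below is a small sketch of that. The name deploy_keys_log and the optional line-count argument are illustrative additions.

```shell
# Sketch: show the most recent deploy-keys.sh entries from the system log.
# The log path defaults to /var/log/messages as in this article; the second
# argument (number of lines) is an illustrative addition.
deploy_keys_log() {
    local logfile="${1:-/var/log/messages}" count="${2:-5}"
    grep -F 'deploy-keys.sh:' "$logfile" | tail -n "$count"
}
```

Run as root, `deploy_keys_log` prints the last five results and `deploy_keys_log /var/log/messages 1` only the latest one.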

- Trigger the script after every reboot by adding the line /root/bin/deploy-keys.sh to the system init script /etc/rc.local

#!/bin/sh
#
# This script will be executed *after* all the other init scripts.
# You can put your own initialization stuff in here if you don't
# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local
/root/bin/deploy-keys.sh
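When many instances are prepared from a script or an image build, the rc.local edit can be automated so that the line is added exactly once. The function below is a sketch of one way to do that. The name add_boot_hook is hypothetical, and it takes the file path as an argument so it can be tried on a copy first.

```shell
# Sketch: append the deploy-keys.sh call to an rc.local-style file only once.
# add_boot_hook is a hypothetical helper; run it as root against /etc/rc.local.
add_boot_hook() {
    local rcfile="$1" hook="${2:-/root/bin/deploy-keys.sh}"
    # -x matches the whole line, -F treats the hook as a fixed string
    grep -qxF -- "$hook" "$rcfile" || printf '%s\n' "$hook" >> "$rcfile"
}
```

Calling `add_boot_hook /etc/rc.local` twice leaves a single /root/bin/deploy-keys.sh line at the end of the file.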